Tourism recovery mode

It’s wonderful to hear that LinkedIn Editors invited me! Pursuing a passion is an incredible thing. At this time, I have been invited by Mitchell Van Homrigh to contribute about how tech can solve tourism pain points. Below, you can find the points that woke up my interest.

Like many others, the tourism sector has been significantly impacted by the COVID-19 pandemic (it looks like that pharmaceutic ambition planned to destroy everything in its path, with the unique intention to get a couple of millions of dollars more in its revenue, another discussion for late). As we move towards recovery, technology could play a crucial role in reviving the industry. From my perspective, as a Data Management Engineer, I would like to highlight two things. Virtual Reality and Data Analytics.

- Virtual Reality (VR) Tours: The airline industry and people are recovering their health and “boom” stamina. For those planning tourism to any country or airport, VR tours can provide immersive experiences. The idea is to offer a VR tour of the destination airport, along with information on what to do before or after disembarking or boarding, such as where to go, what documentation is required, and emergency services information. When travelling to another country, it’s easy to feel lost because you don’t know how far the connection flight or migration/customs procedure is. VR tours can help passengers be more agile and reduce their time in the airport, improving the customer experience.

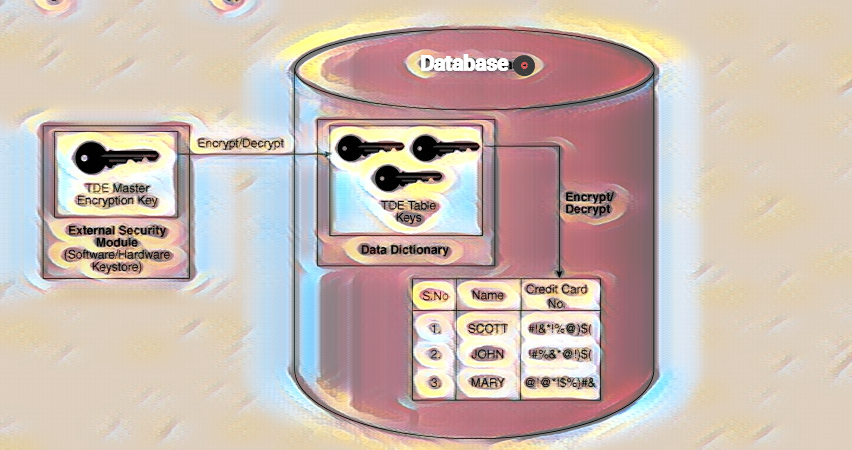

- Data Analytics: Tourism businesses can gain insight into customer behaviour and preferences by utilising data analytics to create more personalised experiences. This can lead to various benefits, such as predicting demand, improving pricing availability, and optimizing inventory for financial optimisation. In fact, a study showed that nine hotels experienced a 22% average increase in revenue after implementing pricing optimisation. Additionally, data analytics can inform funding decisions and shape business development based on detailed user preferences. By identifying potential customers at different stages of the trip planning process, analytics can help businesses target specific groups. Finally, data analytics can improve marketing campaign effectiveness by identifying the best channel to reach customers.

The innovative concept of virtual reality tours can potentially transform how we explore airports and destinations. In a world recovering from a pandemic, these immersive experiences offer a much-needed solution for travellers seeking a hassle-free and efficient journey. Imagine arriving in a new country and embarking on a virtual reality tour that guides you through customs procedures, connecting flights, and emergency services. This game-changing technology has the power to revolutionise the travel industry and create an even more satisfying experience for customers.

Regarding data, Analytics acts as a compass for tourist companies, guiding them in the right direction. By harnessing the power of data, businesses can gain valuable insights into customer behaviors and preferences, allowing them to create personalized experiences. Data analytics is the key ingredient for achieving operational efficiency and financial success, from predicting demand and optimizing pricing to shaping business strategies based on user preferences. This is a theoretical concept and a proven technique for boosting revenue and engaging consumers.

In summary, the COVID-19 outbreak had a major impact on the tourism industry, challenging its ability to adapt and recover. Looking ahead, it is clear that technology can play a critical role in supporting the industry. As a data management engineer, I believe virtual reality (VR) and data analytics are promising game changers. In this post-pandemic era, where the race to recovery is fierce, those who leverage technology wisely will survive and thrive, delivering the best service and experiences to passengers worldwide. The future of tourism is tech-infused, and it’s time to take that leap into a brighter, more innovative tomorrow.